Highly anticipated User Interfaces

Published on August 03, 2017

Just a simple question: can you safely claim that you can navigate and fire events on your Windows PC using only the command prompt? I thought not. That is why the shift from text to graphics was such a major leap, popularized by Apple co-founder Steve Jobs with the hallmark Macintosh operating system in 1984. I highly cherish the move, and so should you. Yet in recent years, we’ve also witnessed innovative UIs that involve the use of touch (e.g. smartphones), voice (e.g. Siri) and even gestures (e.g. Microsoft Kinect).

To put us on the same page, when I talk about user interface (UI) in computing, I am referring to how a computer program or system presents itself to its user, usually via graphics, text and sound. We’re all familiar with the typical Windows and Apple operating systems, where we interact with icons on our desktop using a mouse cursor. Before that, everything was done through the text-based command-line prompt. Never be ashamed of your humble beginnings; rather, peer ahead and anticipate the coming future. Below is a walk through your soon-to-be-common future of advanced user interfaces.

Gesture interfaces

The time has come when spatial motions can be detected seamlessly. The main enabling technology is the arrival of motion-sensing devices such as the Wii Remote in 2006, and Kinect and PlayStation Move in 2010. User interfaces of the future might just be heading in that direction, where interactions with computer systems happen primarily through gestures (as portrayed by Tom Cruise in the 2002 science fiction movie Minority Report).

In gesture recognition, the input comes in the form of hand or other bodily motion used to perform computing tasks, which to date are still mostly input via a device, touch screen or voice. Adding the z-axis to our existing two-dimensional UI should improve the human-computer interaction experience, because depth gives every movement one more direction to work with. Just imagine how many more functions can be mapped to our body movements. You can watch the demo video of g-speak.
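To make the idea of mapping body movements to functions a little more concrete, here is a minimal sketch in Python. It is not how Kinect, g-speak or any real product works; it simply assumes a sensor has already given us the start and end position of a hand as (x, y, z) coordinates in metres, and that positive z means moving toward the screen. The gesture names, the threshold and the `ACTIONS` table are all made up for illustration.

```python
from typing import Dict, Tuple

Point = Tuple[float, float, float]

# Hypothetical names for each axis: (negative direction, positive direction).
AXIS_GESTURES: Dict[str, Tuple[str, str]] = {
    "x": ("swipe_left", "swipe_right"),
    "y": ("swipe_down", "swipe_up"),
    "z": ("pull", "push"),  # assumption: +z points toward the screen
}

# Hypothetical mapping from gesture to what the UI should do.
ACTIONS: Dict[str, str] = {
    "swipe_left": "previous page",
    "swipe_right": "next page",
    "swipe_up": "scroll up",
    "swipe_down": "scroll down",
    "push": "select item",
    "pull": "go back",
}


def classify_gesture(start: Point, end: Point, threshold: float = 0.1) -> str:
    """Name a hand movement by the axis along which it moved the most."""
    dx, dy, dz = (e - s for s, e in zip(start, end))
    # Pick the axis with the largest absolute displacement.
    axis, delta = max((("x", dx), ("y", dy), ("z", dz)), key=lambda p: abs(p[1]))
    if abs(delta) < threshold:
        return "none"  # movement too small to count as a gesture
    negative, positive = AXIS_GESTURES[axis]
    return positive if delta > 0 else negative


if __name__ == "__main__":
    gesture = classify_gesture((0.0, 0.0, 0.0), (0.02, 0.01, 0.5))
    print(gesture, "->", ACTIONS.get(gesture, "nothing"))
```

Notice that the two z-axis gestures (push and pull) only exist because depth is tracked; a flat touch screen could supply the other four at most.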

Tangible user interfaces (TUI)

What if you were to have a computer system that fuses the physical environment with the digital realm to enable the recognition of real-world objects? You simply place an object on it and the system recognizes it. Such a system has been achieved by Microsoft through Microsoft PixelSense (formerly known as Surface). This interactive computing surface can recognize and identify objects that are placed onto the screen. The physics is rather simple: light reflected from the objects reaches multiple infrared cameras, allowing the system to capture and react to the items placed on the screen.

In an advanced version of the technology (Samsung SUR40 with Microsoft PixelSense), the screen uses sensors instead of cameras to detect what touches it. On this surface, you could create digital paintings with real paint brushes, with the strokes based on input from the actual brush tip. The system is also programmed to recognize sizes and shapes and to interact with embedded tags, e.g. a tagged name card placed on the screen will display the card’s information. Smartphones placed on the surface could trigger the system to seamlessly display the images in the phone’s gallery on the screen.

Brain-computer interfaces

I only wish you knew how today’s most celebrated theoretical physicist, Stephen Hawking, speaks to people. He has amyotrophic lateral sclerosis (ALS), a motor neurone disease, so he generally cannot speak to you by word of mouth; instead, he communicates through a computer-based speech synthesizer that he operates with small muscle movements. Brain-computer interfaces aim to go a step further. Our brains generate all kinds of electrical signals with our thoughts, and the premise is that specific thoughts produce distinguishable brainwave patterns. In brain-computer interfaces, these electrical signals are mapped to specific commands, so that thinking the thought can actually carry out the set command.
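The idea of mapping a signal pattern to a command can be sketched in a few lines of Python. This is only a toy illustration, not how the EPOC headset or any real brain-computer interface decodes signals. It assumes each reading has already been boiled down to a short list of numbers, and that we have one stored "template" pattern per command; the template values, command names and distance cutoff are all invented.

```python
import math
from typing import Dict, Optional, Sequence

# Hypothetical reference patterns, one per command (values are made up).
TEMPLATES: Dict[str, Sequence[float]] = {
    "move_left": [0.9, 0.2, 0.1],
    "move_right": [0.1, 0.8, 0.3],
    "select": [0.2, 0.3, 0.9],
}


def decode_command(
    signal: Sequence[float],
    templates: Dict[str, Sequence[float]] = TEMPLATES,
    max_distance: float = 0.5,
) -> Optional[str]:
    """Return the command whose template is closest to the signal.

    If even the closest template is farther away than max_distance,
    return None so that random brain activity does not trigger anything.
    """
    best_command: Optional[str] = None
    best_distance = math.inf
    for command, template in templates.items():
        distance = math.dist(signal, template)  # Euclidean distance
        if distance < best_distance:
            best_command, best_distance = command, distance
    return best_command if best_distance <= max_distance else None


if __name__ == "__main__":
    print(decode_command([0.85, 0.25, 0.1]))  # close to the move_left template
    print(decode_command([0.5, 0.5, 0.5]))    # not close to any template
```

The cutoff matters as much as the matching: a system that always picks the nearest command would fire events even when you are thinking about lunch.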

A striking device, the EPOC neuro headset, has been created by Tan Le, the co-founder and president of Emotiv Lifescience. You can see the demo video. It looks like this UI may take a while to be adequately developed. While you wait, you can think about its potential: a future where one could operate computer systems with thoughts alone. Just imagine immersing yourself in an ultimate gaming experience that responds to your mood (via brainwaves). The potential for such an awesome UI is practically limitless.

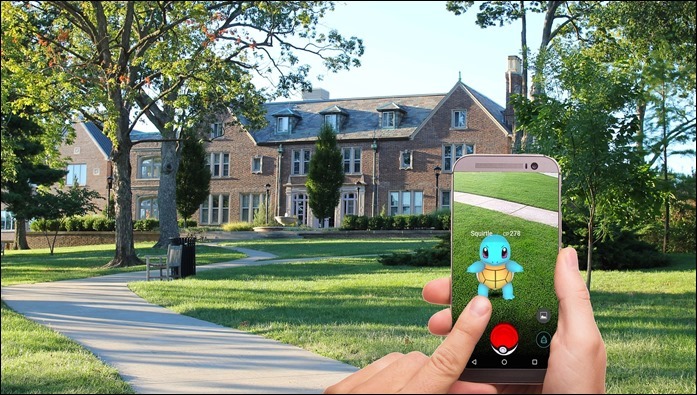

Augmented reality (AR)

Augmented reality is any technology, not only glasses, that overlays digital information on a real-world environment and interacts with it in real time. Picture a see-through device that you can hold over objects, buildings and your surroundings to get useful information. For example, imagine you are visiting China for the first time and you come across a signboard with text that you cannot understand. If you can look through the glass device and see the text translated into English for easy reading, then that is AR.

AR can also make use of your natural environment to create mobile user interfaces that you can interact with, by projecting displays onto walls and even your own hands. Check out how it is done with SixthSense, a prototype of a wearable gestural interface developed at MIT that utilizes AR. AR is getting its biggest boost in awareness from Google’s Project Glass, a pair of wearable eyeglasses that lets you see virtual extensions of reality that you can interact with. Here’s an awesome demo of what to expect.

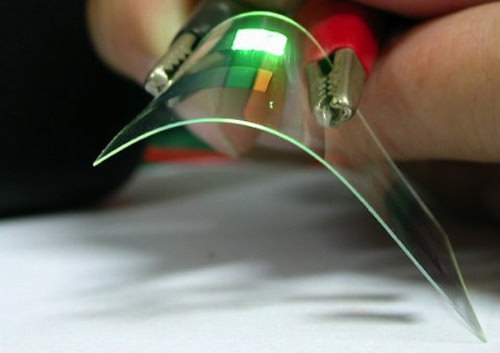

Flexible OLED display

Flexible OLED (organic light-emitting diode) displays use an organic semiconductor that can still emit light even when rolled or stretched. Stick it on a bendable plastic substrate and you have a brand new and less rigid smartphone screen. Moreover, these new screens can be twisted, bent or folded to interact with the computing system within. Bend the phone to zoom in and out, twist a corner to turn the volume up, twist the other corner to turn it down, twist both sides to scroll through photos and more.

Such a flexible UI enables us to interact naturally with the smartphone even when our hands are too occupied to use the touchscreen. This could well be the answer to the sensitivity (or lack thereof) of smartphone screens towards gloved fingers, or to fingers that are too big to hit the right buttons. With this UI, all you need to do is squeeze the phone with your palm to pick up a call.
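The bend, twist and squeeze interactions described in this section boil down to a lookup table from a detected deformation to an action. Here is a minimal Python sketch of that table. Everything in it is assumed for illustration: the gesture names, which corner raises the volume, the zoom step and the `PhoneState` fields do not come from any real phone or SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class PhoneState:
    zoom: float = 1.0
    volume: int = 5        # assumed range: 0 to 10
    photo_index: int = 0
    in_call: bool = False


def zoom_in(state: PhoneState) -> None:
    state.zoom *= 1.25


def zoom_out(state: PhoneState) -> None:
    state.zoom /= 1.25


def volume_up(state: PhoneState) -> None:
    state.volume = min(10, state.volume + 1)


def volume_down(state: PhoneState) -> None:
    state.volume = max(0, state.volume - 1)


def next_photo(state: PhoneState) -> None:
    state.photo_index += 1


def answer_call(state: PhoneState) -> None:
    state.in_call = True


# Hypothetical deformation names mapped to the actions from the text.
HANDLERS: Dict[str, Callable[[PhoneState], None]] = {
    "bend_inward": zoom_in,
    "bend_outward": zoom_out,
    "twist_top_corner": volume_up,
    "twist_bottom_corner": volume_down,
    "twist_both_sides": next_photo,
    "squeeze": answer_call,
}


def apply_deformation(state: PhoneState, gesture: str) -> bool:
    """Run the action for a gesture; return False if the gesture is unknown."""
    handler = HANDLERS.get(gesture)
    if handler is None:
        return False
    handler(state)
    return True


if __name__ == "__main__":
    phone = PhoneState()
    for gesture in ["twist_top_corner", "bend_inward", "squeeze"]:
        apply_deformation(phone, gesture)
    print(phone)
```

Keeping the mapping in one table means a user with gloves on, or with different habits, could remap squeeze or twist without touching the rest of the code.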

Ionized particle display

This one is straight from my wonderland. If liquid molecules can be arranged in an orderly manner and an image produced through them, as in liquid crystal displays, then so can air particles. This is similar to plasma displays, but instead of sealing the ionized gas in an enclosure, why not use the freely available air wherever you want your screen to be, and however big you want it to be? Simply charge air particles in a defined area, then display images through them.

Conclusion

Obviously, with these technologies comes the ‘Big Differentiator’: laziness. So dangerous are the consequences that the whole world warns you about them. But technology is like time. It cannot be killed; it has to come and pass and one day be rendered obsolete, yet still be remembered as a stepping stone. So keep watch of the seasons.