Denial of service (DoS) attacks explained

Published on March 23, 2017

Let’s just say it is Monday night, and you are still in the office when you suddenly become aware of the whirring of the disks and network lights blinking on the Web server.

It seems like your company's Web site is quite well visited tonight, which is good because you are in e-business, selling products over the Internet, and more visits mean more earnings.

You decide to check it out too, but the Web page will not load. Something is wrong. Your network admin confirms, “A flood of packets is surging into our network.”

You take that as good news, but the admin adds, “Your company’s Web site is under a denial-of-service attack. It is receiving so many requests for the Web page that it cannot serve them all; it’s 50 times our regular load. Just like you cannot access the Web site, none of our customers can.”

Can such a business stay alive? No!

Society is getting more and more dependent on the reliability of the Internet. Were you to count the African businesses that depend on the Internet as their link to customers, the number would be whopping (just think of the financial institutions, e-commerce sites, the large airline industry, shipping businesses, e-government platforms, and much more).

Customers are also being encouraged to do most of their business on the Internet.

Major critical infrastructures (public services such as transportation) are also connected to the Internet. In the event of such an attack, your life would be entirely interrupted. Many businesses would come to a halt, and some people could lose what they treasure most: life.

If this were to happen to you, you would become one of the hundreds of thousands of victims of a denial-of-service attack, a pervasive and growing threat to the Internet.

Just what is a denial of service attack, and why should you care?

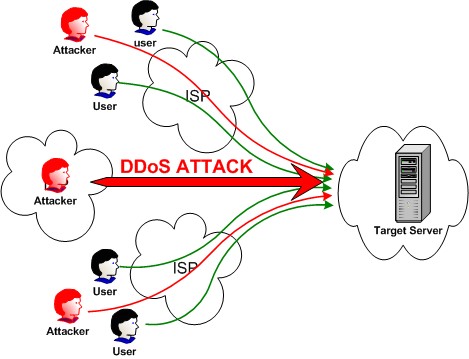

A DoS attack differs sharply from other forms of attack in its motives. In distributed denial-of-service (DDoS) attacks, breaking into many computers and gaining malicious control of them is just the first step.

The attacker then moves on to the DoS attack itself, which has a different goal—to prevent victim machines or networks from offering service to their legitimate users. Mind you, no data is stolen, nothing is altered on the victim machines, and no unauthorized access occurs.

The victim simply stops offering service to normal clients because it is preoccupied with handling the attack traffic.

While no unauthorized access to the victim of the DDoS flood occurs, a large number of other hosts (technically stepping stones, handlers, and agents) have previously been compromised and controlled by the attacker, who uses them as attack weapons.

The two modes of DoS attack

The goal of a DoS attack is (generally) to disrupt some legitimate activity, such as browsing Web pages, listening to online radio, transferring money from your bank account, or even docking ships communicating with a naval port.

This denial-of-service effect is achieved by sending messages to the target that interfere with its operation and make it hang, crash, reboot, or do useless work.

One way to interfere with a legitimate operation is to exploit a vulnerability present on the target machine or inside the target application. The attacker sends a few messages crafted specifically to take advantage of the given vulnerability.

Another way is to send many messages that consume some key resource at the target, such as bandwidth, CPU time, memory, etc. The target application, machine, or network spends all of its critical resources on handling the attack traffic and cannot attend to its legitimate clients.

Of course, to generate such a vast number of messages, the attacker must control a very powerful machine with a fast processor and a lot of available network bandwidth.

For the attack to be successful, it has to overload the target's resources. This means that an attacker's machine must be able to generate more traffic than a target, or its network infrastructure, can handle.

It is often difficult for the attacker to generate a sufficient number of messages from a single machine to overload those resources. However, if the attacker gains control over 10,000 to 100,000 machines and simultaneously engages them in generating messages to the victim, together they form a formidable attack network and, with proper use, will be able to overload a well-provisioned victim. This is a distributed denial-of-service (DDoS) attack.
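To make the arithmetic concrete, here is a minimal sketch in Python. The figures used (1 Mbit/s of upstream traffic per compromised machine and a 10 Gbit/s victim link) are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
import math


def agents_needed(victim_capacity_mbps: float, per_agent_mbps: float) -> int:
    """Smallest number of agents whose combined traffic exceeds the victim's capacity."""
    if victim_capacity_mbps < 0 or per_agent_mbps <= 0:
        raise ValueError("capacity must be non-negative and per-agent rate positive")
    return math.floor(victim_capacity_mbps / per_agent_mbps) + 1


def aggregate_mbps(agent_count: int, per_agent_mbps: float) -> float:
    """Total traffic produced when every agent sends at the same rate."""
    return agent_count * per_agent_mbps


# Assumed, illustrative figures (not taken from any real attack).
VICTIM_LINK_MBPS = 10_000.0  # a 10 Gbit/s link
PER_AGENT_MBPS = 1.0         # a modest home connection per agent

print(agents_needed(VICTIM_LINK_MBPS, PER_AGENT_MBPS))  # 10001
print(aggregate_mbps(100_000, PER_AGENT_MBPS))          # 100000.0
```

Under these assumptions, a single machine sending 1 Mbit/s is harmless, but 100,000 such machines together produce ten times what the victim's link can carry.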

Both DoS and DDoS are a huge threat to the operation of Internet sites, but the DDoS problem is more complex and harder to solve.

First, it uses a very large number of machines. This yields a powerful weapon. Regardless of how well-provisioned it is, any target can be taken offline.

Gathering and engaging a large army of machines has become trivially simple because many automated tools for DDoS can be found on hacker Web pages and in chat rooms. Such tools do not require sophistication to be used and can inflict very effective damage.

A large number of machines gives another advantage to an attacker. Even if the target could identify attacking machines (and there are effective ways of hiding this information), what action can be taken against a network of 100,000 hosts?

The second characteristic of some DDoS attacks that increases their complexity is the use of seemingly legitimate traffic. Resources are consumed by many legitimate-looking messages; when comparing the attack message with a legitimate one, there are frequently no tell-tale features to distinguish them. Since the attack misuses a legitimate activity, it is extremely hard to respond to the attack without also disturbing this legitimate activity.

It is true that the denial of service only lasts as long as the attack is active, but it can be devastating if you consider the following two scenarios:

(a) Some sites offer a critical free service to Internet users. For example, the Internet's Domain Name System (DNS) provides the necessary information to translate human-readable Web addresses (such as www.example.com) into Internet Protocol (IP) addresses (such as 192.1.34.140).

All Web browsers and numerous other applications depend on DNS to be able to fetch information requested by the users. Suppose DNS servers are under a DoS attack and cannot respond due to overload. In that case, many sites may become unreachable because their addresses cannot be resolved, even though they are online and fully capable of handling traffic. This makes DNS a part of the critical infrastructure, and other equally important pieces of the Internet's infrastructure are also vulnerable.

(b) Sites that offer services to users through online orders make money only when users can access those services. For example, a large software or book-selling site cannot sell its products to its customers if they cannot browse the site’s Web pages and order products online.

A DoS attack on such sites means a severe loss of revenue for as long as the attack lasts. Prolonged or frequent attacks also inflict long-lasting damage to a site's reputation—customers who cannot access the desired service are likely to take their business to the competition.

Sites whose reputations were damaged may have trouble attracting new customers or investor funding in the future.

Protection mechanisms

Below are some basic protection mechanisms against these kinds of attacks. Network administrators must still put in considerable work to make them effective, and further research, coupled with awareness, is also necessary.

Hygiene approaches try to close as many opportunities for DDoS attacks in your computers and networks as possible. The best way to enhance security is to keep your network simple, well-organized, and well-maintained.

Fixing Host Vulnerabilities. Some DDoS attacks target a software bug or an error in protocol or application design to deny service. Thus, the first step in maintaining network hygiene is keeping software packages patched and up to date. In addition, applications can also be run in a contained environment and closely observed to detect anomalous behaviour or excess resource consumption. Even when all software patches are applied as soon as they are available, it is impossible to guarantee the absence of bugs in software.

Fixing Network Organization. Well-organized networks have no bottlenecks or hot spots that can become an easy target for a DDoS attack. A good way to organize a network is to spread critical applications across several servers located in different subnetworks. The attacker then has to overwhelm all the servers to achieve denial of service. Providing path redundancy among network points creates a robust topology that cannot be easily disconnected. Network organization should be as simple as possible to facilitate easy understanding and management.

Filtering Dangerous Packets. Most vulnerability attacks send specifically crafted packets to exploit a vulnerability in the target. Defences against such attacks require at least inspection of packet headers, and often digging deeper into the data portion of packets, to recognize the malicious traffic. However, most firewalls and routers cannot inspect packet data, and filtering requires an inline device. Even when packets have features that these devices can recognize, there are often reasons against using them this way. For example, frequent rapid changes to firewall rules and router ACLs are often frowned upon for stability reasons (e.g., what if an accident leaves your firewall wide open?). Some types of Intrusion Prevention Systems (IPS), which act like an Intrusion Detection System (IDS) in recognizing packets by signature and then filter or alter them in transit, could be used but may be problematic and/or costly on very high-bandwidth networks.
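The idea of recognizing packets by header fields and by payload signature can be sketched in a few lines of Python. This is a toy model, not how any particular firewall or IPS works: the `Packet` type, the blocked port, and the byte signature are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Packet:
    """Hypothetical, simplified view of a packet: two header fields plus data."""
    src_ip: str
    dst_port: int
    payload: bytes


# Assumed example rules; real signatures come from vendor or community feeds.
BLOCKED_PORTS = {19}
PAYLOAD_SIGNATURES = [b"\x90\x90\x90\x90"]


def is_malicious(packet: Packet) -> bool:
    # Header inspection: cheap, and possible on most filtering devices.
    if packet.dst_port in BLOCKED_PORTS:
        return True
    # Data inspection: look inside the payload for a known byte pattern.
    return any(sig in packet.payload for sig in PAYLOAD_SIGNATURES)


def filter_packets(packets: List[Packet]) -> List[Packet]:
    """Return only the packets that match no rule."""
    return [p for p in packets if not is_malicious(p)]
```

Even in this toy form, the payload check must scan every byte of every packet, which hints at why deep inspection becomes costly on high-bandwidth links.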

Source validation approaches verify the user's identity before granting the service request. In some cases, these approaches are intended merely to combat IP spoofing. While the attacker can still exhaust the server's resources by deploying a huge number of agents, this form of source validation prevents IP spoofing, thus simplifying DDoS defence. More ambitious source validation approaches seek to ensure that a human user (rather than DDoS agent software) is at the other end of a network connection, typically by performing reverse Turing tests. Administrators should consider these approaches.
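One way to picture anti-spoofing validation is a stateless cookie: the server sends a keyed hash of the claimed source address back to that address and serves the request only if the client echoes it. A spoofer never receives the reply, so it cannot return the cookie. The sketch below assumes this simplified scheme; the function names are hypothetical, and real mechanisms (such as TCP SYN cookies) also bind timestamps and other connection details.

```python
import hashlib
import hmac
import secrets

# Secret known only to the server; generated once at startup in this sketch.
SERVER_SECRET = secrets.token_bytes(32)


def make_cookie(client_ip: str, secret: bytes = SERVER_SECRET) -> str:
    """Keyed hash of the claimed source address, sent back to that address."""
    return hmac.new(secret, client_ip.encode("utf-8"), hashlib.sha256).hexdigest()


def validate(claimed_ip: str, cookie: str, secret: bytes = SERVER_SECRET) -> bool:
    """Accept the request only if the cookie matches the claimed address."""
    expected = make_cookie(claimed_ip, secret)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, cookie)


# A genuine client at 203.0.113.7 receives its cookie and echoes it back.
cookie = make_cookie("203.0.113.7")
print(validate("203.0.113.7", cookie))   # True
# A request claiming a different address cannot reuse that cookie.
print(validate("198.51.100.9", cookie))  # False
```

Because the server recomputes the cookie instead of storing it, validation itself does not consume per-client memory that an attacker could exhaust.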

Proof of work

Some protocols are asymmetric—they consume more resources on the server than on the client's side. Those protocols can be misused for denial of service. The attacker generates many service requests and ties up the server's resources. If the protocol is such that the resources are released after a certain time, the attacker simply repeats the attack to keep the server's resources constantly occupied. One approach to protect against attacks on such asymmetric protocols is redesigning the protocols to delay the commitment of the server's resources. The protocol is balanced by introducing another asymmetric step, this time in the server's favour, before committing the server's resources. The server requires proof of work from the client.
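A common way to realize proof of work is a hash puzzle: the server issues a random challenge, and the client must find a nonce whose hash with the challenge starts with a given number of zero bits. The sketch below assumes SHA-256 and a small difficulty so that it runs quickly; the function names and the difficulty value are illustrative choices, not part of any specific protocol.

```python
import hashlib
import itertools
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def verify(challenge: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Server side: a single hash, cheap no matter how hard the puzzle was."""
    digest = hashlib.sha256(challenge + str(nonce).encode("ascii")).digest()
    return leading_zero_bits(digest) >= difficulty_bits


def solve(challenge: bytes, difficulty_bits: int) -> int:
    """Client side: brute-force search, about 2**difficulty_bits hashes on average."""
    for nonce in itertools.count():
        if verify(challenge, nonce, difficulty_bits):
            return nonce


challenge = os.urandom(16)     # fresh per request, so old answers cannot be replayed
nonce = solve(challenge, 12)   # assumed difficulty: roughly 4,000 hashes on average
print(verify(challenge, nonce, 12))  # True
```

The asymmetry now favours the server: checking an answer costs one hash, while producing it costs thousands, and the server can raise the difficulty when it is under load.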

Resource allocation. Denial of service is essentially based on one or more attack machines seizing an unfair share of the target's resources. One class of DDoS protection approaches, based on resource allocation (or fair resource sharing), seeks to prevent DoS attacks by assigning a fair share of resources to each client. Since the attacker needs to steal resources from legitimate users to deny service, resource allocation defeats this goal.
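Fair sharing can be sketched as a per-client token bucket: each client earns request credit at a fixed rate, and a client that has spent its credit is refused without affecting anyone else. The class below is a minimal illustration with hypothetical names and parameters. It takes the current time as an argument so its behaviour is easy to follow; a real deployment would pass something like `time.monotonic()` and would need reliable client identification, for example through source validation.

```python
from typing import Dict, Tuple


class FairShareLimiter:
    """Gives every client its own token bucket (hypothetical sketch)."""

    def __init__(self, rate_per_sec: float, burst: float) -> None:
        self.rate = rate_per_sec   # tokens earned per second
        self.burst = burst         # maximum tokens a client can hold
        self._buckets: Dict[str, Tuple[float, float]] = {}  # id -> (tokens, last_seen)

    def allow(self, client_id: str, now: float) -> bool:
        # New clients start with a full bucket.
        tokens, last = self._buckets.get(client_id, (self.burst, now))
        # Refill according to elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[client_id] = (tokens - 1.0, now)
            return True
        self._buckets[client_id] = (tokens, now)
        return False


limiter = FairShareLimiter(rate_per_sec=1.0, burst=2.0)
# A greedy client sends five requests at once; only its burst of two is served.
print([limiter.allow("greedy", now=0.0) for _ in range(5)])  # [True, True, False, False, False]
# Another client arriving at the same moment is unaffected.
print(limiter.allow("normal", now=0.0))  # True
```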

Hiding. None of the above approaches protects the server from bandwidth overload attacks that clog incoming links with random packets, creating congestion and pushing out legitimate traffic. Hiding addresses this problem. Hiding obscures the server's or the service's location. As the attacker does not know how to access the server, he cannot attack it anymore. The server is usually hidden behind an "outer wall of guards." Client requests first hit this wall, and then clients are challenged to prove their legitimacy. Any source validation or proof-of-work approach can be used to validate the client. The legitimacy test has to be sufficiently reliable to weed out attack agent machines. It also has to be distributed so that the agents cannot crash the outer-wall guards by sending too many service requests. Legitimate clients' requests are then relayed to the server via an overlay network. In some approaches, a validated client may be able to send his requests more directly without going through the legitimacy test for every message or connection. In the extreme, trusted and preferred clients are given a permanent "passkey" that allows them to take a fast path to the service without ever providing further proof of legitimacy. There are clear risks to that extreme. An example of a hiding approach is SOS (Secure Overlay Services).